
I want to try it out at least once.


https://www.imagazine.co.jp/gan%EF%BC%9A敵対的生成ネットワークとは何か%E3%80%80%EF%BD%9E%E3%80%8C教師/

- z (noise) acts like a seed value.

- As the seed value changes little by little, the generated image changes gradually.

- You often see this in images generated by GANs.
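One common way to get that gradual change is to interpolate linearly between two noise vectors. Below is a minimal sketch in plain Python, assuming 4-dimensional Gaussian noise. `sample_z` and `interpolate` are hypothetical helpers, and the trained generator that would turn each vector into an image is not shown.

```python
import random

def sample_z(seed, dim=4):
    # The seed fully determines the noise vector z, like a random seed.
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def interpolate(z0, z1, steps):
    # Linear interpolation: t = 0 gives z0, t = 1 gives z1.
    out = []
    for i in range(steps):
        t = i / (steps - 1)
        out.append([(1 - t) * a + t * b for a, b in zip(z0, z1)])
    return out

z_a = sample_z(seed=0)
z_b = sample_z(seed=1)
path = interpolate(z_a, z_b, steps=5)
# Feeding each vector in `path` to a trained generator G (not shown) would
# give images that morph gradually from G(z_a) to G(z_b).
```

Spherical interpolation is sometimes used instead for Gaussian noise. Linear interpolation is simply the easiest version to read.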

Understanding the Basic Structure of GAN (Part 1) - Qiita

- The target audience seems to be somewhere in the middle (intermediate readers).

- Discriminator

- This looks like a typical (?) binary classification problem (real vs. fake) solved with a neural network.
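As a rough sketch of the "classification problem" framing: the discriminator outputs the probability that its input is real, and it is scored with binary cross-entropy (label 1 = real, label 0 = fake). This sketch assumes a single linear layer plus sigmoid as a stand-in for a real neural network. The weights and inputs are made-up numbers.

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def discriminator(x, w, b):
    # Probability that x is real: one linear layer + sigmoid
    # (a stand-in for a deeper network).
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def bce(p, label):
    # Binary cross-entropy: label 1 = real, label 0 = fake.
    eps = 1e-12  # avoids log(0)
    return -(label * math.log(p + eps) + (1 - label) * math.log(1 - p + eps))

w, b = [2.0, -1.0], 0.0                    # hypothetical weights
p_real = discriminator([1.0, 0.5], w, b)   # a sample we label as real
p_fake = discriminator([-1.0, 1.0], w, b)  # a sample we label as fake
loss = bce(p_real, 1) + bce(p_fake, 0)     # training would lower this
```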

- Generator

- It performs upsampling.

- Instead of a "reverse CNN" (transposed convolution), it simply reshapes the values.

- A reverse CNN is used in something called DCGAN (blu3mo).
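Here is a minimal sketch of "upsample by reshaping": a fully connected layer maps z to 28 × 28 = 784 values, and those values are simply reshaped into a 2-D image. The sizes, the tanh output activation, and the random weights are assumptions for illustration, not taken from the Qiita article.

```python
import math
import random

def dense(z, weights, bias):
    # Fully connected layer: one output per row of `weights`.
    return [sum(wij * zj for wij, zj in zip(row, z)) + bj
            for row, bj in zip(weights, bias)]

def reshape(flat, height, width):
    # Turn a flat list into `height` rows of `width` values (no convolution).
    assert len(flat) == height * width
    return [flat[r * width:(r + 1) * width] for r in range(height)]

def generator(z, weights, bias, height=28, width=28):
    # Dense layer -> tanh (pixel values in [-1, 1]) -> reshape into an image.
    flat = [math.tanh(v) for v in dense(z, weights, bias)]
    return reshape(flat, height, width)

rng = random.Random(0)
z_dim, h, w = 8, 28, 28  # hypothetical sizes
weights = [[rng.gauss(0.0, 0.1) for _ in range(z_dim)] for _ in range(h * w)]
bias = [0.0] * (h * w)
z = [rng.gauss(0.0, 1.0) for _ in range(z_dim)]
image = generator(z, weights, bias, h, w)  # 28 rows x 28 columns
```

A DCGAN-style generator would instead grow the spatial size step by step with transposed convolutions.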

- Training

- “Train only the Discriminator using real data.”

- “Train the Generator using the Discriminator.”

- They seem to alternate between these two steps.
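The alternating procedure can be illustrated with a deliberately tiny 1-D GAN, offered only as a toy. The "real data" is assumed to be samples from a normal distribution with mean 4. The generator is assumed to be G(z) = a·z + c, and the discriminator is a single logistic unit, so the gradients can be written by hand. All names and numbers are hypothetical. Real GANs use neural networks and automatic differentiation, and even this toy is not guaranteed to converge, since GAN training often oscillates.

```python
import math
import random

def sigmoid(s):
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def d_step(params, real, fake, lr):
    # Step 1: train ONLY the discriminator D(x) = sigmoid(w * x + b).
    # Gradient ascent on mean[log D(x)] + mean[log(1 - D(G(z)))]; a, c stay fixed.
    w, b = params["w"], params["b"]
    gw_real = gb_real = 0.0
    for x in real:
        d = sigmoid(w * x + b)
        gw_real += (1.0 - d) * x  # d/dw log D(x)
        gb_real += 1.0 - d
    gw_fake = gb_fake = 0.0
    for g in fake:
        d = sigmoid(w * g + b)
        gw_fake += -d * g  # d/dw log(1 - D(g))
        gb_fake += -d
    params["w"] = w + lr * (gw_real / len(real) + gw_fake / len(fake))
    params["b"] = b + lr * (gb_real / len(real) + gb_fake / len(fake))

def g_step(params, zs, lr):
    # Step 2: train the generator G(z) = a * z + c THROUGH the frozen discriminator.
    # Gradient descent on mean[log(1 - D(G(z)))]; w, b stay fixed.
    a, c, w, b = params["a"], params["c"], params["w"], params["b"]
    ga = gc = 0.0
    for z in zs:
        d = sigmoid(w * (a * z + c) + b)
        # Chain rule: d/dG log(1 - D(G)) = -D(G) * w; dG/da = z, dG/dc = 1.
        ga += -d * w * z
        gc += -d * w
    params["a"] = a - lr * ga / len(zs)
    params["c"] = c - lr * gc / len(zs)

def train(steps=200, n=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    params = {"w": 0.0, "b": 0.0, "a": 1.0, "c": 0.0}
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(n)]  # hypothetical real data
        zs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        fake = [params["a"] * z + params["c"] for z in zs]
        d_step(params, real, fake, lr)  # only D's parameters change here
        zs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        g_step(params, zs, lr)          # only G's parameters change here
    return params
```

Because this discriminator is a single threshold, it can only push the generator's mean c toward the real mean. It cannot judge the spread, which is one reason real discriminators are deeper networks.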

- Loss Function

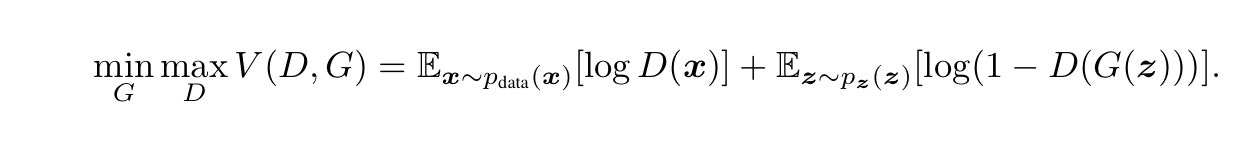
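For reference, here is the standard GAN minimax objective in LaTeX. The image above presumably shows this same formula, with $p_{\text{data}}$ the distribution of real data and $p_z$ the noise distribution:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$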

- I don’t understand the meaning of .

- When training the Discriminator, we want to maximize V(D, G).

- Maximizing log D(x) = making D output 1 when the input is real data (x).

- Maximizing log(1 - D(G(z))) = making D output 0 when the input is fake data (G(z)).

- When training the Generator, we want to minimize V(D, G).

- Minimizing log(1 - D(G(z))) = making D output 1 when the input is fake data (G(z)).

- In other words, the Generator is trained in the opposite direction from the Discriminator.
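To see the tug-of-war in numbers, here is a small sketch that estimates V(D, G) from the discriminator's outputs on a batch of real samples and a batch of generated samples. The probabilities are made up. A discriminator that tells real from fake well gives a high V (what D wants). One that is fooled gives a low V (what G wants). One that outputs 0.5 everywhere gives V = -log 4, which is the value at the theoretical equilibrium.

```python
import math

def value_fn(d_real, d_fake):
    # Sample estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].
    # d_real: D's outputs on real samples; d_fake: D's outputs on G(z).
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

good = value_fn([0.9, 0.9], [0.1, 0.1])  # D separates real from fake: high V
confused = value_fn([0.5], [0.5])        # D cannot tell them apart
fooled = value_fn([0.9], [0.9])          # G fools D on fake data: low V
```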