from UTokyo 1S Information α (東大1S情報α) Task 2: Training a CNN on CIFAR10

- Initially tried with around 5 layers

- Accuracy: .375

- Increased to 12 layers

- Accuracy: .325

- Oh, it got worse

- Tried 5 layers with 60 parameters

- Accuracy: .410

- It improved

- What if we increase the number of parameters even more?

- Tried with 120 parameters

- Oh, the training accuracy increased but the generalization performance is not good

- Well, that makes sense: more parameters means more representational capacity, which also makes it easier to overfit the training set

- The problem now is how to improve generalization (blu3mo)(blu3mo)

- Tried with 120 parameters

- Reduced the number of parameters in the last layers

- Maybe it will put more pressure on generalization in the last layers?

- Not much difference

- Tried doing more convolutions

- The accuracy didn’t improve much, but the training and validation graphs became similar

- In other words, we have achieved good generalization, so we just need to increase the model’s capacity to represent things

- Tried adding more Dense layers

- It didn’t work

- It stopped around .35 accuracy

- Tried adding more Conv layers

- Hmm, it didn’t work well

- Changing the activation function didn’t make much difference

- The problem might be that the number of filters in Conv is 1

- With so few parameters and outputs, the model is too small

- Here it is (blu3mo)(blu3mo)(blu3mo)(blu3mo)

- This is it

- Without increasing the parameters in Conv, we can’t increase the capacity to represent things (blu3mo)
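To see how little a single-filter Conv layer can hold, here is a minimal sketch of the usual parameter count for a 2D convolution (kernel height × kernel width × input channels × filters, plus one bias per filter). The helper name `conv2d_params` is made up for illustration, and the 3×3 kernel and 64-filter comparison are assumptions, not values taken from the experiments at this point in the log.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters, use_bias=True):
    """Trainable parameters in a standard 2D convolution layer."""
    weights = kernel_h * kernel_w * in_channels * filters
    biases = filters if use_bias else 0
    return weights + biases


# CIFAR10 images are RGB, so the first Conv layer sees 3 input channels.
one_filter = conv2d_params(3, 3, in_channels=3, filters=1)
many_filters = conv2d_params(3, 3, in_channels=3, filters=64)

print("1 filter  :", one_filter)    # 3*3*3*1 + 1 = 28
print("64 filters:", many_filters)  # 3*3*3*64 + 64 = 1792
```

With one filter, the layer also outputs a single channel, so everything downstream only ever sees one feature map.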

- Then, let’s add more Dense layers

- As expected, the two graphs diverge

- I’m starting to understand (blu3mo)

- Tried various things, but couldn’t surpass the 0.7 accuracy barrier

- If we increase the capacity, the training accuracy almost reaches 1, but the validation accuracy remains at 0.7

- Tried reducing the number of layers instead

- It improved slightly, and it ended much faster

- Improved slightly by using LeakyReLU
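For reference, a minimal sketch of how LeakyReLU differs from plain ReLU. The slope of 0.3 for negative inputs is an assumed value for illustration; the notes do not say which slope was used.

```python
def relu(x):
    """Plain ReLU: negative inputs are clamped to zero."""
    return x if x > 0 else 0.0


def leaky_relu(x, negative_slope=0.3):
    """LeakyReLU: negative inputs are scaled down instead of zeroed,
    so a small gradient still flows when the unit is inactive."""
    return x if x > 0 else negative_slope * x


for value in (-2.0, -0.5, 0.0, 1.5):
    print(value, relu(value), leaky_relu(value))
```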

- https://qiita.com/koshian2/items/e66a7ee9bf60ca24c940

- It seems that gradually increasing the kernel size is better

- Indeed, when you think about it, it makes sense

- After extracting fine-grained features, the later layers should extract larger-scale ones

- But it didn’t improve much


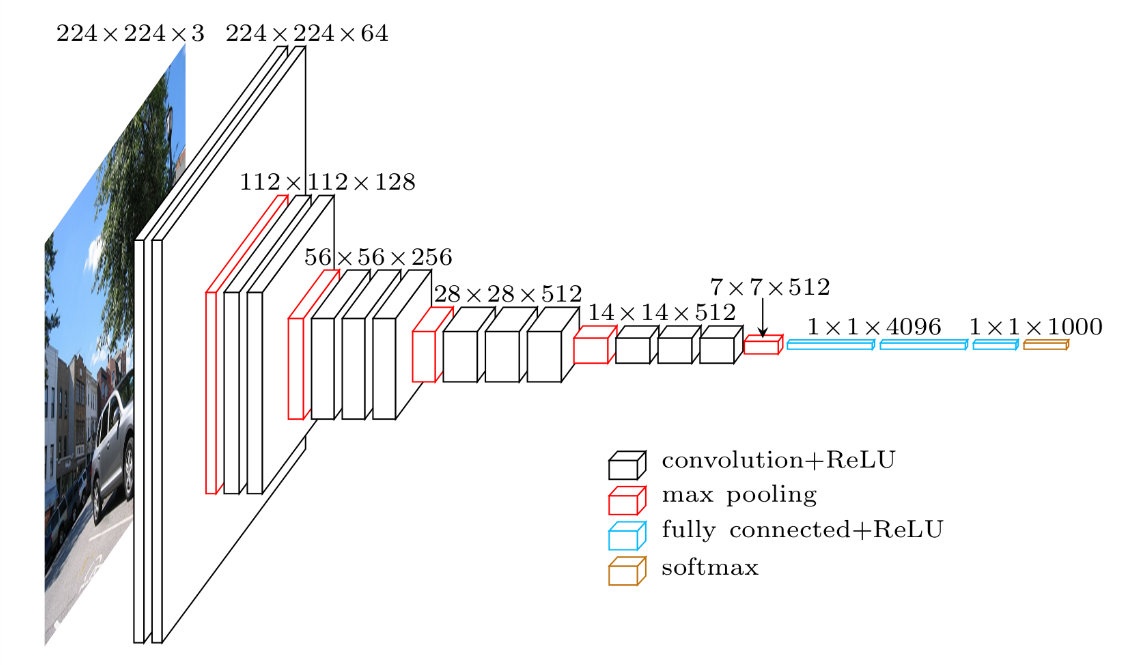
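The "small features first, larger features later" intuition can be made concrete with the receptive field of stacked convolutions. This is a rough sketch assuming stride 1 and no pooling between layers; the kernel sizes 3, 5, 7 are hypothetical, since the notes do not record which sizes were tried.

```python
def receptive_field(kernel_sizes):
    """Receptive field (in input pixels per side) of stacked stride-1
    convolutions: each k x k layer widens the field by k - 1."""
    field = 1
    for k in kernel_sizes:
        field += k - 1
    return field


print(receptive_field([3, 3, 3]))  # 1 + 2 + 2 + 2 = 7
print(receptive_field([3, 5, 7]))  # 1 + 2 + 4 + 6 = 13
```

Stacked 3×3 layers also grow the receptive field, just more slowly, which may be one reason that changing the kernel sizes made little difference here.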

- Tried mimicking VGG16

- It seems to have a structure that focuses on convolutions, and brings in two large Dense layers at the end

- This reminded me of the picture of repeatedly compressing the image's spatial dimensions until it becomes one-dimensional (blu3mo)

- It clearly exceeded 0.7 accuracy (blu3mo)(blu3mo)(blu3mo)

      Input(shape=input_shape),
      Conv2D(64, kernel_size=(3, 3)), activation,
      Conv2D(64, kernel_size=(3, 3)), activation,
      MaxPooling2D(pool_size=(2, 2)),
      Conv2D(128, kernel_size=(3, 3)), activation,
      Conv2D(256, kernel_size=(3, 3)), activation,
      MaxPooling2D(pool_size=(2, 2)),
      Conv2D(256, kernel_size=(3, 3)), activation,
      Conv2D(512, kernel_size=(3, 3)), activation,
      #MaxPooling2D(pool_size=(2, 2)),
      Flatten(),
      Dense(2000), activation,
      Dense(2000), activation,
      Dense(output_dim, activation="softmax")
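To check how the image shrinks through the layer list above, here is a small shape tracer. It assumes 32×32 RGB CIFAR10 inputs and the Keras defaults that the snippet does not override (stride 1 and `padding="valid"` for `Conv2D`, non-overlapping windows for `MaxPooling2D`); the helper names are made up for this sketch.

```python
def conv_valid(size, kernel=3):
    """Output width/height of a stride-1 convolution with 'valid' padding."""
    return size - kernel + 1


def pool(size, window=2):
    """Output width/height of non-overlapping max pooling."""
    return size // window


# ("conv", filters) or ("pool",), in the same order as the layer list above.
layers = [("conv", 64), ("conv", 64), ("pool",),
          ("conv", 128), ("conv", 256), ("pool",),
          ("conv", 256), ("conv", 512)]

size, channels = 32, 3  # CIFAR10 images are 32x32 RGB
shapes = []
for layer in layers:
    if layer[0] == "conv":
        size, channels = conv_valid(size), layer[1]
    else:
        size = pool(size)
    shapes.append((size, size, channels))
    print(layer, "->", shapes[-1])

flattened = size * size * channels
print("Flatten output:", flattened)
```

Under these assumptions the last Conv layer already outputs 1×1×512, so a third 2×2 pooling would have nothing left to pool, which may be why that line is commented out.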

- Tried using three Dense(2000) layers, or increasing the number of Conv layers or filters, and it resulted in the following:

-

- It's the one we did in class

- Tried various things, but couldn't find anything better than [[情報α課題2: CIFAR10でCNNを訓練#627faf6e79e1130000a462d4|627faf6e79e1130000a462d4]]

- Adjusted the batch size

- [Adjusting batch_size](https://teratail.com/questions/246310)

- Oh, reducing the batch size actually takes more time

- Improved slightly when set to 1024

- I wonder why?

- I thought it would be difficult to generalize, but...

- 1000: Stable at .75 validation accuracy.

- It doesn’t go beyond that, it seems.
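One likely reason smaller batches take longer: each epoch needs more weight updates, and each update uses the hardware less efficiently. A rough sketch of the update counts, assuming the full 50,000-image CIFAR10 training set (fewer if a validation split is held out). Batch size 32 is included only as a comparison point because it is the Keras default for `fit`, not because it was tried here.

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Number of weight updates needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


TRAIN_SAMPLES = 50_000  # assumed: full CIFAR10 training set

steps = {b: steps_per_epoch(TRAIN_SAMPLES, b) for b in (32, 1000, 1024, 2000)}
for batch_size, count in steps.items():
    print(f"batch_size={batch_size:>4}: {count} steps per epoch")
```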

- If you want to improve generalization, you can try Data Augmentation or Dropout.

- Dropout

- Set it to .2 in three places.

- Oh, looks good.

- Let’s increase it further.

- It’s stuck at .77.

- If increasing Dropout improves generalization, it might be good to increase the number of parameters and layers at the same time.


      Conv2D(128, kernel_size=(3, 3)), activation,
      Dropout(.4),
      Conv2D(128, kernel_size=(3, 3)), activation,
      MaxPooling2D(pool_size=(2, 2)),
      Conv2D(128, kernel_size=(3, 3)), activation,
      Dropout(.4),
      Conv2D(256, kernel_size=(3, 3)), activation,
      MaxPooling2D(pool_size=(2, 2)),
      Conv2D(256, kernel_size=(3, 3)), activation,
      Dropout(.4),
      Conv2D(512, kernel_size=(3, 3)), activation,
      GlobalAveragePooling2D(),
      Dense(2000), activation,
      Dropout(.4),
      Dense(2000), activation,
      Dense(output_dim, activation = "softmax")

- With this, it reaches around .8 accuracy.
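As a reminder of what a `Dropout(.4)` layer does during training, here is a plain-Python sketch of inverted dropout. It is an illustration of the idea rather than the Keras implementation, and the function name and the all-ones input are made up for this example.

```python
import random


def dropout(values, rate, training=True, rng=None):
    """Inverted dropout: zero each value with probability `rate` and scale
    the survivors by 1 / (1 - rate) so the expected sum stays the same.
    Requires 0 <= rate < 1."""
    if not training or rate == 0:
        return list(values)  # at inference time nothing is dropped
    rng = rng or random.Random()
    keep_scale = 1.0 / (1.0 - rate)
    return [v * keep_scale if rng.random() >= rate else 0.0 for v in values]


activations = [1.0] * 10  # hypothetical layer output
dropped = dropout(activations, rate=0.4, rng=random.Random(0))
print(dropped)                                          # mix of 0.0 and ~1.667
print(dropout(activations, rate=0.4, training=False))  # unchanged
```

Because a different random subset of units is silenced at every step, the network cannot rely on any single unit, which is the pressure toward generalization seen above.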

- Data Augmentation

- Tried rotation and flip.

- Oh, the training and validation accuracies are the same.

- Good (blu3mo) (blu3mo)

- Just need to increase the number of epochs or improve expressiveness.

- For data augmentation, maybe the vertical flip is unnecessary?

- Referring to https://www.cs.toronto.edu/~kriz/cifar.html

- Not much difference.
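The two flips differ only in which axis is reversed. A minimal sketch on a tiny stand-in image (hypothetical values, one number per pixel) shows the difference. CIFAR10 classes such as airplane, ship, and horse are mostly photographed upright, so a mirrored image is still plausible while an upside-down one rarely is.

```python
def horizontal_flip(image):
    """Mirror left-to-right: reverse the pixels within each row."""
    return [row[::-1] for row in image]


def vertical_flip(image):
    """Mirror top-to-bottom: reverse the order of the rows."""
    return image[::-1]


# A tiny 2x3 stand-in "image" (hypothetical values).
image = [[1, 2, 3],
         [4, 5, 6]]

print(horizontal_flip(image))  # [[3, 2, 1], [6, 5, 4]]
print(vertical_flip(image))    # [[4, 5, 6], [1, 2, 3]]
```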

- This is how it looks if you keep going.

- Tried increasing the batch size to 2000.

- Not much difference.

- Removed RandomRotation and it improved further.

- However, it sometimes falls behind in training.

- Maybe the way rotation is done was not good? (blu3mo)

- I think this black area was not good.

- Fixed it ✅

- Tried gradually increasing the kernel size.

- Wow, it looks like this after 60 iterations.

- Can we expect this linear growth to continue?

- Well, it seems like it’s actually getting worse.